In the final part of my multipart article on converting my Murdered by Midnight Board Game to a Mobile Game App using Swift and SwiftUI, I’ll cover supporting Music, Sound Effects & Voice in my Multiplatform SwiftUI game.

Hopefully, other indie developers can find this information useful.

Index:

- Part 1 – Deals with converting a mockup to SwiftUI, handling different device sizes & screen ratios and displaying screens as needed.

- Part 2 – Covers adding Game Center support to a SwiftUI app.

- Part 3 – Covers handling tvOS quirks in a SwiftUI app.

- Part 4 – Covers handling macOS Catalyst quirks in a SwiftUI app.

- Bonus Round – Covers handling Music, Sound Effects & Voice in a SwiftUI app.

Handling Background Music and Sound Effects

I knew I wanted to have background music in my game, along with the ability to play a few sound effects at the same time, over this music. Here is the common class that I cooked up to handle everything:

import Foundation

import AVFoundation

import SwiftUI

/// Class to handle playing background music and sound effects throughout the game.

class SoundManager: NSObject, AVAudioPlayerDelegate {

typealias FinishedPlaying = () -> Void

// MARK: - Enumerations

/// Defines which channel the sound effect will be played through.

enum SoundEffectChannel:Int {

/// Play through channel 1.

case channel01 = 0

/// Play through channel 2.

case channel02 = 1

}

// MARK: - Static Properties

/// Defines the common, shared instance of the Sound Manager

static var shared:SoundManager = SoundManager()

// MARK: - Properties

/// Global variable that if `true`, background music will be played in the game.

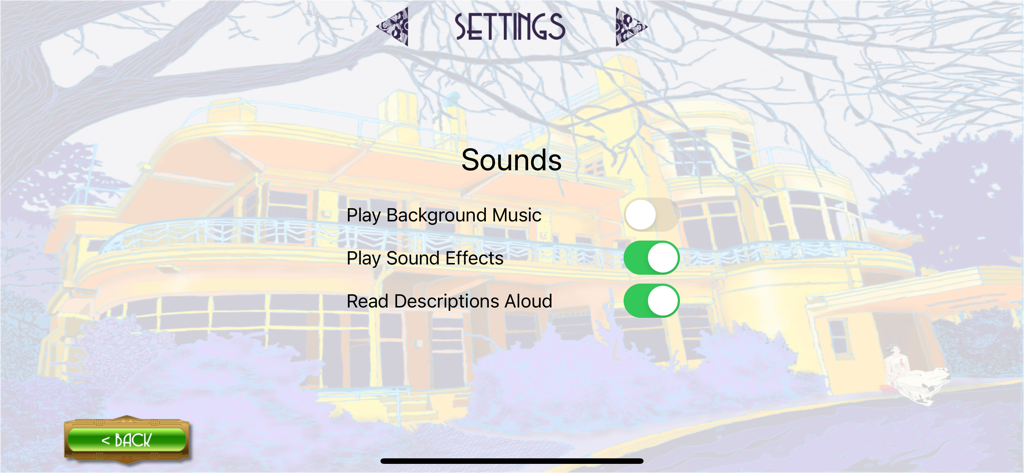

@AppStorage("playBackgroundMusic") var shouldPlayBackgroundMusic: Bool = true

/// Global variable that if `true`, sound effect will be played in the game.

@AppStorage("playSoundEffects") var shouldPlaySoundEffects: Bool = true

/// The `AVAudioPlayer` used to play background music.

var backgroundMusic:AVAudioPlayer?

/// The sound currently being played in the background music channel.

var currentBackgroundMusic:String = ""

/// The `AVAudioPlayer` used to play room specific background music.

var backgroundSound:AVAudioPlayer?

/// The `AVAudioPlayer` used to play the first channel of sound effects.

var soundEffect01:AVAudioPlayer?

/// The `AVAudioPlayer` used to play the second channel of sound effects.

var soundEffect02:AVAudioPlayer?

/// The delegate used to handle events on the first channel of sound effects.

private var soundEffectDelegate01:SoundManagerDelegate? = nil

/// The delegate used to handle events on the second channel of sound effects.

private var soundEffectDelegate02:SoundManagerDelegate? = nil

// MARK: - Functions

/// Starts playing the background music for the given audio file. If the sound is already playing, it will not be restarted. The sound provided will loop forever until `stopBackgroundMusic()` is called.

/// - Parameter song: The sound (with extension) to be played.

func startBackgroundMusic(song:String) {

guard shouldPlayBackgroundMusic else {

return

}

// Is the song already playing?

guard currentBackgroundMusic != song else {

return

}

if let backgroundMusic = backgroundMusic {

if backgroundMusic.isPlaying {

stopBackgroundMusic()

}

}

let path = Bundle.main.path(forResource: song, ofType:nil)

if let path = path {

let url = URL(fileURLWithPath: path)

do {

currentBackgroundMusic = song

backgroundMusic = try AVAudioPlayer(contentsOf: url)

backgroundMusic?.volume = 0.30

backgroundMusic?.numberOfLoops = -1

backgroundMusic?.play()

} catch {

print("Unable to play background music: \(error)")

}

} else {

print("Unable to find background music: \(song)")

}

}

/// Plays the room specific background sound once, layered over any background music. Call `stopBackgroundSound()` to stop it early.

/// - Parameter sound: The sound (with extension) to be played.

func playBackgroundSound(sound:String) {

guard shouldPlayBackgroundMusic else {

return

}

if let backgroundSound = backgroundSound {

if backgroundSound.isPlaying {

stopBackgroundSound()

}

}

let path = Bundle.main.path(forResource: sound, ofType:nil)

if let path = path {

let url = URL(fileURLWithPath: path)

do {

backgroundSound = try AVAudioPlayer(contentsOf: url)

backgroundSound?.play()

} catch {

print("Unable to play background sound: \(error)")

}

} else {

print("Unable to find background sound: \(sound)")

}

}

/// Stops the currently playing background music.

func stopBackgroundMusic() {

backgroundMusic?.stop()

currentBackgroundMusic = ""

backgroundMusic = nil

}

/// Stops the currently playing room specific background music.

func stopBackgroundSound() {

backgroundSound?.stop()

backgroundSound = nil

}

/// Plays the given sound effect on the given effect channel.

/// - Parameters:

/// - sound: The sound (with extension) to be played.

/// - channel: The effect channel to play the song on. The default is `channel01`.

/// - didFinishPlaying: The closure that will be called when the sound finishes playing.

func playSoundEffect(sound:String, channel:SoundEffectChannel = .channel01, didFinishPlaying:FinishedPlaying? = nil) {

guard shouldPlaySoundEffects else {

if let didFinishPlaying = didFinishPlaying {

didFinishPlaying()

}

return

}

let path = Bundle.main.path(forResource: sound, ofType:nil)

if let path = path {

let url = URL(fileURLWithPath: path)

do {

switch(channel) {

case .channel01:

soundEffectDelegate01 = SoundManagerDelegate(action: didFinishPlaying)

soundEffect01 = try AVAudioPlayer(contentsOf: url)

soundEffect01?.delegate = soundEffectDelegate01

soundEffect01?.play()

case .channel02:

soundEffectDelegate02 = SoundManagerDelegate(action: didFinishPlaying)

soundEffect02 = try AVAudioPlayer(contentsOf: url)

soundEffect02?.delegate = soundEffectDelegate02

soundEffect02?.play()

}

} catch {

print("Unable to play sound effect: \(error)")

}

} else {

print("Unable to find sound effect: \(sound)")

}

}

}

/// Delegate that handles a sound finishing playing on one of the sound effect channels.

class SoundManagerDelegate: NSObject, AVAudioPlayerDelegate {

typealias FinishedPlaying = () -> Void

// MARK: - Properties

/// The closure that gets called when the sound finishes playing.

var finishPlaying:FinishedPlaying? = nil

// MARK: - Initializers

/// Creates a new instance of the object with the given parameters.

/// - Parameter action: The closure that gets called when the sound finishes playing.

init(action:FinishedPlaying?) {

// Initialize

self.finishPlaying = action

}

// MARK: - Functions

/// Function called when the sound finishes playing

/// - Parameters:

/// - player: The `AVAudioPlayer` that was playing the sound.

/// - flag: If `true`, the sound played successfully.

func audioPlayerDidFinishPlaying(_ player: AVAudioPlayer, successfully flag: Bool) {

if let finishPlaying = finishPlaying {

finishPlaying()

}

}

}

All playback is handled by a standard AVAudioPlayer. Here’s what all of the functions in the class do:

- startBackgroundMusic(song:String) – Plays the given music on a loop if the user wants to hear background music and the given song is not already playing.

- playBackgroundSound(sound:String) – Layers a background sound on top of the music that does not loop.

- stopBackgroundMusic() – Instantly stops any background music.

- stopBackgroundSound() – Instantly stops any background sound effect.

- playSoundEffect(sound:String, channel:SoundEffectChannel = .channel01, didFinishPlaying:FinishedPlaying? = nil) – Plays the given sound effect on the given channel. Use didFinishPlaying to take an action after the effect ends.

- SoundManagerDelegate – Handles a sound effect finishing playing.
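One detail worth calling out is why SoundManager stores soundEffectDelegate01 and soundEffectDelegate02 in properties of its own. As far as I know, AVAudioPlayer holds its delegate weakly, so a SoundManagerDelegate that is only assigned to the player's delegate property would be released right away and didFinishPlaying would never fire. The sketch below reproduces that behavior without AVFoundation. FakePlayer, FinishHandler and EffectChannel are hypothetical stand-ins made up for this illustration, not part of the game:

```swift
/// Hypothetical stand-in for `SoundManagerDelegate`: wraps the completion closure.
final class FinishHandler {
    let action: () -> Void
    init(action: @escaping () -> Void) { self.action = action }
}

/// Hypothetical stand-in for `AVAudioPlayer`.
/// The weak delegate is the assumption being illustrated.
final class FakePlayer {
    weak var delegate: FinishHandler?

    /// Simulates the sound reaching its end.
    func finish() { delegate?.action() }
}

/// Mirrors one effect channel: the handler is stored strongly next to the player.
final class EffectChannel {
    private let player = FakePlayer()
    private var handler: FinishHandler?

    func play(didFinishPlaying: @escaping () -> Void) {
        let newHandler = FinishHandler(action: didFinishPlaying)
        handler = newHandler          // strong reference keeps the handler alive
        player.delegate = newHandler  // weak reference, as assumed for the real player
    }

    /// Simulates the channel's sound finishing.
    func simulateFinish() { player.finish() }
}

/// Returns how many times the completion ran when nothing retains the handler.
func completionsWithoutRetention() -> Int {
    var count = 0
    let player = FakePlayer()
    // The compiler warns here: the new handler is deallocated immediately.
    player.delegate = FinishHandler { count += 1 }
    player.finish()
    return count
}

/// Returns how many times the completion ran when the channel retains the handler.
func completionsWithRetention() -> Int {
    var count = 0
    let channel = EffectChannel()
    channel.play { count += 1 }
    channel.simulateFinish()
    return count
}
```

Under these assumptions the first function reports no completions and the second reports one, which is the reason for keeping one stored delegate per channel.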

When I use this class in the game, I make a call against the shared instance:

import SwiftUI

import SwiftletUtilities

import GameKitUI

import GameKit

struct StartGameView: View {

@ObservedObject var dataStore = MasterDataStore.sharedDataStore

...

var body: some View {

GeometryReader { geometry in

ZStack(alignment: .topLeading) {

...

}

.ignoresSafeArea()

}

.ignoresSafeArea()

.onAppear {

SoundManager.shared.startBackgroundMusic(song: "AnechoixJazzLoop.mp3")

}

}

}

Handling Speech Synthesis

To make the game a little quirky and different, I knew I wanted to have each character’s part read aloud. While it would have been nice to have gotten a bunch of voice actors to record all of the different parts, my limited budget just didn’t allow for it.

So I decided to see how far I could push Siri’s text-to-speech functionality. With a little experimentation, I was able to get a couple of different voices programmatically to add a little interest.

Here’s the shared library I ended up creating to handle everything:

import Foundation

import AVFoundation

import SwiftUI

class SpeechManager {

// MARK: - Static Functions

static var shared:SpeechManager = SpeechManager()

// MARK: - Enumerations

public enum VoiceLanguage: String {

case arabicSaudiArabia = "ar-SA"

case czechCzechRepublic = "cs-CZ"

case danishDenmark = "da-DK"

case germanGermany = "de-DE"

case greekModernGreece = "el-GR"

case englishAustralia = "en-AU"

case englishUnitedKingdom = "en-GB"

case englishIreland = "en-IE"

case englishIndia = "en-IN"

case englishUnitedStates = "en-US"

case englishSouthAfrica = "en-ZA"

case spanishMexico = "es-MX"

case spanishSpain = "es-ES"

case finnishFinland = "fi-FI"

case frenchCanada = "fr-CA"

case frenchFrance = "fr-FR"

case hebrewIsrael = "he-IL"

case hindiIndia = "hi-IN"

case indonesianIndonesia = "id-ID"

case italianItaly = "it-IT"

case japaneseJapan = "ja-JP"

case koreanKorea = "ko-KR"

case dutchBelgium = "nl-BE"

case dutchNetherlands = "nl-NL"

case norwegianNorway = "no-NO"

case polishPoland = "pl-PL"

case portugueseBrazil = "pt-BR"

case portuguesePortugal = "pt-PT"

case romanianRomania = "ro-RO"

case russianRussianFederation = "ru-RU"

case slovakSlovakia = "sk-SK"

case swedishSweden = "sv-SE"

case thaiThailand = "th-TH"

case turkishTurkey = "tr-TR"

case chineseChina = "zh-CN"

case chineseHongKong = "zh-HK"

case chineseTaiwan = "zh-TW"

}

// MARK: - Properties

@AppStorage("speakText") var speakText: Bool = true

var speechSynthesizer:AVSpeechSynthesizer = AVSpeechSynthesizer()

// MARK: - Functions

/// Uses the default **Speech Synthesizer** to speak the given text aloud.

/// - Parameter text: The text to read aloud to the user.

func sayPhrase(_ text:String) {

guard speakText else {

return

}

let speechUtterance = AVSpeechUtterance(string: text)

speechSynthesizer.speak(speechUtterance)

}

/// Says the given phrase in the given language.

/// - Parameters:

/// - text: The text to speak.

/// - inVoice: The language to speak in.

func sayPhrase(_ text:String, inVoice:VoiceLanguage) {

guard speakText else {

return

}

let speechSynthesisVoice = AVSpeechSynthesisVoice(language: inVoice.rawValue)

let speechUtterance = AVSpeechUtterance(string: text)

speechUtterance.voice = speechSynthesisVoice

speechSynthesizer.speak(speechUtterance)

}

/// Stops any speech currently running.

func stopSpeaking() {

guard speakText else {

return

}

speechSynthesizer.stopSpeaking(at: .word)

}

}

This code is really pretty simple. The only “special” thing I did was to take all of the languages that can be spoken on iOS and add them to an enum (VoiceLanguage) to make them a little more “human readable”.
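Because each case's raw value is the language code that gets passed to AVSpeechSynthesisVoice(language:), the enum also works in the other direction: a stored code can be turned back into a case with init(rawValue:). Here is a minimal sketch of that round trip on a trimmed-down copy of the enum. The voiceLanguage(forCode:fallback:) helper and its underscore normalization are my own additions for illustration and do not exist in the game:

```swift
import Foundation

/// Trimmed-down copy of `SpeechManager.VoiceLanguage`, for illustration only.
enum VoiceLanguage: String, CaseIterable {
    case englishUnitedKingdom = "en-GB"
    case englishIreland = "en-IE"
    case englishUnitedStates = "en-US"
    case frenchFrance = "fr-FR"
    case germanGermany = "de-DE"
}

/// Hypothetical helper: maps a language code such as "en-IE" (or "en_IE")
/// to a case, falling back to the given default when the code is unknown.
func voiceLanguage(forCode code: String,
                   fallback: VoiceLanguage = .englishUnitedStates) -> VoiceLanguage {
    // Accept underscore-separated identifiers by converting them to hyphens.
    let normalized = code.replacingOccurrences(of: "_", with: "-")
    return VoiceLanguage(rawValue: normalized) ?? fallback
}
```

Something like this could be handy if a character's voice were ever stored as a plain string, for example in a JSON data file, rather than hard-coded.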

In the game I used it like this:

.onAppear{

DispatchQueue.main.async {

if dataStore.dontAnnounce {

dataStore.dontAnnounce = false

} else {

let person = dataStore.getCharacter(id: dataStore.lastConversation.avatar)!

if dataStore.lastConversation.sex == .female {

switch(person.nationality) {

case .irish:

SpeechManager.shared.sayPhrase(dataStore.lastConversation.text, inVoice: .englishIreland)

case .german:

SpeechManager.shared.sayPhrase(dataStore.lastConversation.text, inVoice: .germanGermany)

case .british, .french:

SpeechManager.shared.sayPhrase(dataStore.lastConversation.text, inVoice: .englishSouthAfrica)

default:

SpeechManager.shared.sayPhrase(dataStore.lastConversation.text, inVoice: .englishUnitedStates)

}

} else {

switch(person.nationality) {

case .british, .scottish:

SpeechManager.shared.sayPhrase(dataStore.lastConversation.text, inVoice: .englishIndia)

case .french:

SpeechManager.shared.sayPhrase(dataStore.lastConversation.text, inVoice: .frenchFrance)

default:

SpeechManager.shared.sayPhrase(dataStore.lastConversation.text, inVoice: .englishUnitedKingdom)

}

}

}

}

}

The only part of real interest here is that I had to call speech synthesis on the main thread, or it caused the app to go crazy.
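The nested switch statements in that snippet boil down to a lookup from sex and nationality to a voice. As a sketch, the lookup could be pulled into a pure function so the view makes a single sayPhrase call and the mapping can be checked without playing any audio. The Sex and Nationality enums below are guesses based on the cases visible in the snippet (the .male and .american cases in particular are assumptions), and VoiceLanguage is a trimmed-down copy of the real enum:

```swift
/// Trimmed-down copy of `SpeechManager.VoiceLanguage`, for illustration only.
enum VoiceLanguage: String {
    case englishUnitedKingdom = "en-GB"
    case englishIreland = "en-IE"
    case englishIndia = "en-IN"
    case englishUnitedStates = "en-US"
    case englishSouthAfrica = "en-ZA"
    case frenchFrance = "fr-FR"
    case germanGermany = "de-DE"
}

/// Assumed shape of the game's types; only `.female` appears in the original snippet.
enum Sex { case female, male }

/// Assumed shape of the game's types; `.american` is a placeholder for "everything else".
enum Nationality { case american, british, french, german, irish, scottish }

/// Picks a voice using the same rules as the nested switches in the view.
func voice(for nationality: Nationality, sex: Sex) -> VoiceLanguage {
    switch (sex, nationality) {
    case (.female, .irish):
        return .englishIreland
    case (.female, .german):
        return .germanGermany
    case (.female, .british), (.female, .french):
        return .englishSouthAfrica
    case (.female, _):
        return .englishUnitedStates
    case (.male, .british), (.male, .scottish):
        return .englishIndia
    case (.male, .french):
        return .frenchFrance
    case (.male, _):
        return .englishUnitedKingdom
    }
}
```

With a function like this, the body of the else branch could shrink to one sayPhrase call that passes voice(for:sex:) as the inVoice argument, assuming the game's own types are used in place of the placeholders.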

Support

If you find this useful, please consider making a small donation:

It’s through the support of contributors like yourself that I can continue to create useful articles like this one and continue to build, release and maintain high-quality, well documented Swift Packages for free.

Closing

For those of you who stuck with me through the entire article, thanks! And I hope you did find some useful information.